Introducing Prompt Engineering (Unlock the Power of GPT)

One can generate creative, accurate and relevant outputs with effective prompt engineering. Unlock the limitless potential of generative AI by learning how to use the right prompts.

If you have a computer and a stable high-speed internet connection at home, chances are you have messed around with DALL-E or ChatGPT at some point.

Chances are you used these tools for fun or at the eleventh hour before a submission. Either the essay deadline was very close or you just wanted to treat yourself to some exciting imagery. Whatever it was, we are not judging.

Technology is here to make our lives easier, and it only makes sense that we use it to the best of our abilities.

Generative AI tools such as DALL-E, Midjourney, ChatGPT, and Google’s Bard are revolutionizing the way we work and get things done.

Text is the most direct and prevalent channel through which humans interact with these tools. They are not alone in taking instructions as text: calculators accept typed inputs, and modern-day computers accept code, to get things done.

Large language models such as ChatGPT and text-to-image generators such as DALL-E work the same way, and they are highly sensitive to nuances in the prompts they receive.

And this is where Prompt Engineering comes into the picture.

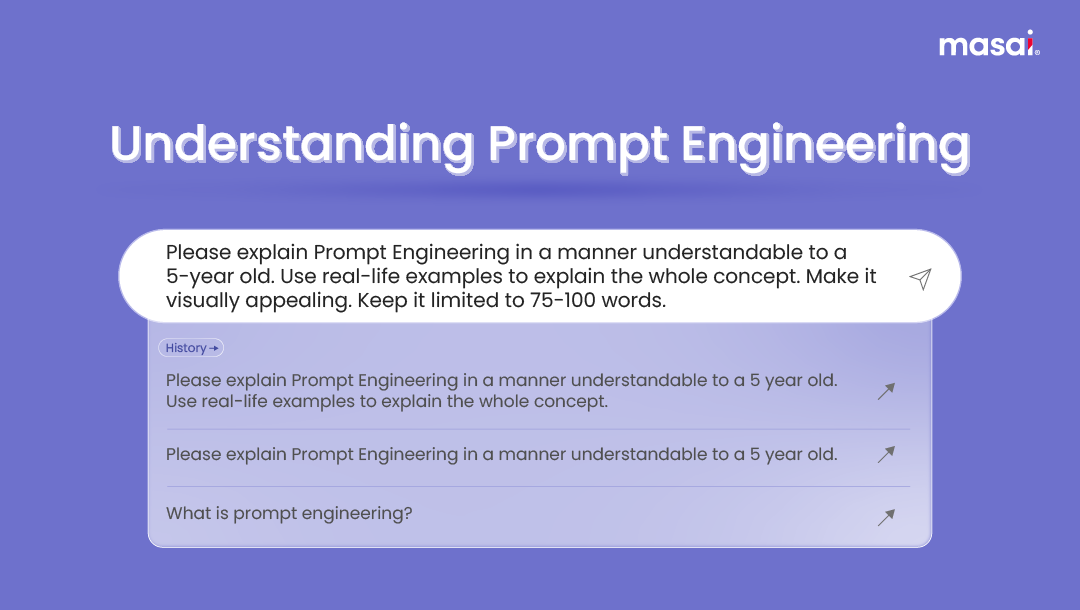

What is Prompt Engineering?

Prompt Engineering is the process of designing effective prompts to generate accurate and relevant outputs. A prompt cannot raise a model’s underlying intelligence, but the right prompt helps the model make far better use of what it already knows.

Students often understand a concept best when their favorite teacher or their peers explain it, because those people use terms that are relatable and make sense. In the same way, a good prompt engineer helps an AI model understand the context of the information it is given.

For example, we could ask ChatGPT simply to write an essay on SEO.

Now let’s provide a more detailed prompt.

Let us try: “Write an essay on SEO and include the importance of it in blogs”.

This time, the response explains SEO first and then lays out its importance.

So if you want to use AI to its full potential, you need to make sure you are engineering prompts, not writing them.
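The move from the bare SEO request to the more detailed one can be made systematic. The Python snippet below is a minimal sketch: `build_prompt` is a hypothetical helper (not part of any library) that appends explicit requirements to a base task. It only assembles the prompt text; sending it to a model is left out.

```python
from typing import Sequence


def build_prompt(task: str, requirements: Sequence[str] = ()) -> str:
    """Return the task followed by one bullet line per explicit requirement.

    Hypothetical helper for illustration; it builds text and calls no model.
    """
    lines = [task.strip()]
    lines.extend(f"- {req.strip()}" for req in requirements)
    return "\n".join(lines)


# The bare request versus the refined one from the SEO example.
basic = build_prompt("Write an essay on SEO.")
detailed = build_prompt(
    "Write an essay on SEO.",
    ["Include the importance of SEO in blogs."],
)
print(basic)
print(detailed)
```

Keeping each requirement on its own line makes it easy to add, remove, or reorder constraints while iterating on a prompt.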

Importance of Prompts

Now, let’s look at why prompts matter and how they are processed to provide the responses a user wants.

Let’s start with an example. When asked to compare Google’s Android and Apple’s iOS, ChatGPT breaks down both operating systems on various parameters.

Our intent up until this point is purely research.

Now, let’s say we want to decide which mobile operating system to go for. Here, we need to follow up with another prompt, something as simple as “Which one is better?”

The response thus generated is detailed and helps us reach a decision.

It is interesting to note that the second prompt did not need to restate what was being compared: ChatGPT derived that context from the previous prompt. Still, it took an extra prompt to get a decisive, decision-making response.

From this example, we can conclude that ChatGPT works only with the information contained in the prompts it receives, including earlier prompts in the same conversation.
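A follow-up like “Which one is better?” works because chat interfaces send the earlier turns of the conversation back to the model along with the new prompt. The sketch below assumes the role/content message format used by chat-style APIs such as OpenAI’s Chat Completions; `add_turn` is a hypothetical helper, the assistant reply is a placeholder rather than real model output, and no API is called.

```python
from typing import Dict, List

Message = Dict[str, str]


def add_turn(history: List[Message], role: str, content: str) -> List[Message]:
    """Return a new conversation history with one more message appended."""
    return history + [{"role": role, "content": content}]


history: List[Message] = []
history = add_turn(history, "user", "Compare Google's Android and Apple's iOS.")
# Placeholder standing in for the model's comparison; not real output.
history = add_turn(history, "assistant", "<comparison of Android and iOS>")
history = add_turn(history, "user", "Which one is better?")

# The whole history, not just the last question, is what the model receives,
# so "Which one" can be resolved from the first turn.
for message in history:
    print(f"{message['role']}: {message['content']}")
```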

Large language models like ChatGPT require prompts to generate responses. They do not have the ability to get into our brains and figure out what we actually want.

Thus, to get responses that actually make our lives easier, it is essential to craft effective prompts. Effective prompts help Large Language Models (LLMs) determine exactly what users want.

This is how Prompt Engineering as a discipline makes a difference. Prompt Engineering helps reduce the number of iterations a user would require to get the desired response and also makes it easier for the model to process information.

How does a Large Language Model interpret these prompts? The answer is Natural Language Processing (NLP).

NLP bridges the gap between humans and computers; it is, in effect, the engine that lets a language model work with text.

It is the branch of AI that enables computers to understand text and spoken words in much the same way human beings can. NLP combines statistical, machine learning, and deep learning models to do so.
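One of the first things an NLP pipeline does with a prompt is split it into tokens and map each token to an integer ID. Production LLMs use learned subword tokenizers (for example, byte-pair encoding); the word-level version below is a deliberately simplified sketch of the idea, with a vocabulary built on the fly rather than learned.

```python
import re
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    """Split text into lowercase words and individual punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def encode(tokens: List[str], vocab: Dict[str, int]) -> List[int]:
    """Map each token to an integer ID, adding unseen tokens to the vocabulary."""
    ids = []
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
        ids.append(vocab[token])
    return ids


vocab: Dict[str, int] = {}
tokens = tokenize("Which one is better?")
ids = encode(tokens, vocab)
print(tokens)  # ['which', 'one', 'is', 'better', '?']
print(ids)     # [0, 1, 2, 3, 4]
```

The model never sees the raw string, only sequences of IDs like these, which is one reason small wording changes in a prompt can change the output.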

Thus the role of prompts is very important in helping models give out an output that is aligned with the context of the user.

Types of Prompts

Several types of prompts can be used in NLP. Here is a list:

- Text-based prompts: These prompts are typically in the form of written text and provide additional context and direction to the model.

- Keyword-based prompts: These prompts are designed to identify specific keywords or phrases that are relevant to the task at hand.

- Rule-based prompts: These prompts are based on a set of rules that are used to guide the model towards specific outputs.

- Generative prompts: These prompts are designed to generate new text based on a specific set of guidelines.
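To make two of these categories concrete, here is a small Python sketch that assembles a keyword-based prompt and a rule-based prompt. The helper names and the example keywords and rules are hypothetical illustrations, not a standard API.

```python
from typing import Sequence


def keyword_prompt(task: str, keywords: Sequence[str]) -> str:
    """Keyword-based prompt: steer the model toward specific terms."""
    return f"{task}\nFocus on these keywords: {', '.join(keywords)}."


def rule_prompt(task: str, rules: Sequence[str]) -> str:
    """Rule-based prompt: list explicit, numbered rules the output must follow."""
    numbered = [f"{i}. {rule}" for i, rule in enumerate(rules, start=1)]
    return "\n".join([task, "Follow these rules:", *numbered])


print(keyword_prompt("Write a product description for running shoes.",
                     ["lightweight", "cushioning", "breathable"]))
print(rule_prompt("Summarize the article below.",
                  ["Use at most three sentences.", "Do not add opinions."]))
```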

Key Considerations When Designing Prompts

It is important to consider a few key factors while designing prompts, because they determine how much useful context the model receives and therefore how effective its output is.

- Relevance: Prompts should match the output the user intends and supply the context needed to produce it.

- Clarity: Prompts should be clear and to the point so that they cannot be misinterpreted.

- Diversity: Prompts should draw on varied, contextual inputs that give the model what it needs to produce effective output.

- Ethical considerations: Prompts should be designed so that they are neither biased nor discriminatory.

NLP models provide information that is aligned with the context they receive, so we should write prompts as if we were telling a friend about a movie we watched recently: with enough detail and context for them to follow along.

Applications of Prompt Engineering

Prompt engineering can improve the accuracy and efficiency of a wide range of NLP tasks. Here are a few of its applications.

Text Classification

Text classification is an essential NLP task with many practical applications, such as spam filtering and sentiment analysis.

It is like sorting your toys into different boxes. You might have a box for your cars, a box for your dolls, and a box for your blocks. Text classification does the same thing but with words!

It reads words and puts them into boxes based on what they're talking about!

Text classification assigns a label to a piece of text, which helps users understand what the text is about at a glance.
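With an LLM, text classification is often done through a few-shot prompt: list the allowed labels, show a couple of labeled examples, and ask for the label of the new text. The sketch below assumes a hypothetical sentiment task with the labels `positive`, `negative`, and `neutral`; it builds the prompt and validates a reply against the allowed labels, without calling any model.

```python
from typing import Optional, Sequence, Tuple

# Hypothetical label set for a sentiment-classification task.
LABELS = ("positive", "negative", "neutral")


def classification_prompt(text: str, examples: Sequence[Tuple[str, str]]) -> str:
    """Build a few-shot prompt: allowed labels, labeled examples, then the new text."""
    lines = [f"Classify the text as one of: {', '.join(LABELS)}.", ""]
    for example_text, label in examples:
        lines.append(f"Text: {example_text}\nLabel: {label}\n")
    lines.append(f"Text: {text}\nLabel:")
    return "\n".join(lines)


def parse_label(reply: str) -> Optional[str]:
    """Normalize a model reply; return None if it is not an allowed label."""
    label = reply.strip().lower().rstrip(".")
    return label if label in LABELS else None


examples = [
    ("I love how light this laptop is.", "positive"),
    ("The package arrived two weeks late.", "negative"),
]
prompt = classification_prompt("The battery died in an hour.", examples)
print(prompt)
print(parse_label(" Negative. "))  # negative
```

Checking the reply against a fixed label set catches answers that drift outside the boxes you defined.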

Question Answering

It is the task of automatically answering questions asked in natural language. The questions can be simple, like “What is the capital of India?” or “Who is the Prime Minister of India?” These factual questions have direct answers and can be put to the model in a simple question format.

Questions can also be complex, requiring reasoning and assumptions; for example, a user might ask “What would happen if the ozone layer were removed?” To answer this, the model must identify the key concepts and reason from facts.

This becomes challenging when the prompt is not specific to the context, which can lead to inaccurate outputs. A well-crafted prompt gives the model the right keywords and concepts, shifting its focus to the most important parts of the text so that it can generate accurate answers.
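One common way to hand the model the right keywords and concepts is to supply the relevant passage and ask it to answer only from that passage. Here is a minimal Python sketch; `qa_prompt` and its exact wording are our own illustration, not a standard template.

```python
def qa_prompt(question: str, context: str) -> str:
    """Ground the question in supplied context and allow an explicit "I don't know"."""
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, reply \"I don't know\".\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


print(qa_prompt("What is the capital of India?",
                "New Delhi is the capital of India."))
```

Allowing an explicit “I don't know” gives the model a sanctioned alternative to guessing when the context is insufficient.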

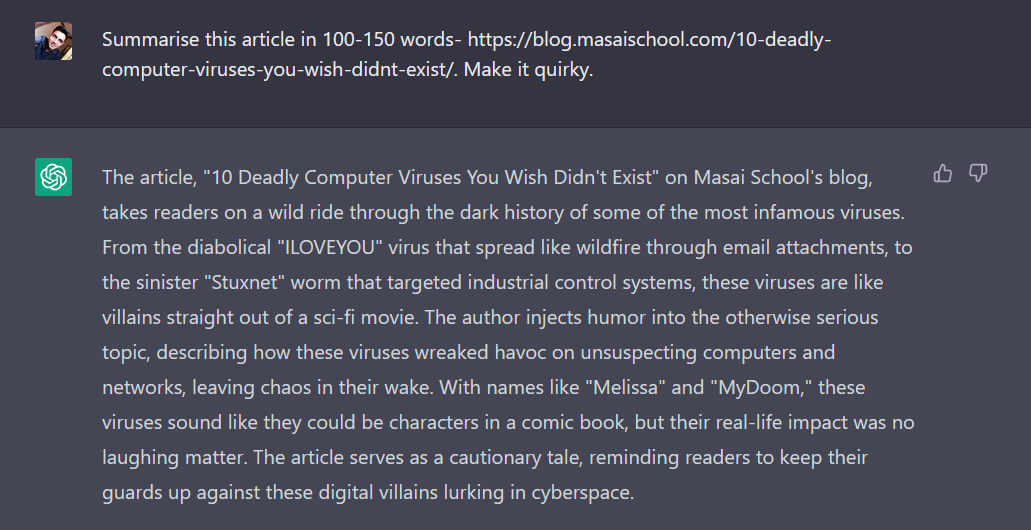

Summarization

Ever tried getting ChatGPT to write an essay for you by handing it a huge chunk of information and asking it to condense the whole thing into a limited number of words?

Summarization is the task of condensing large pieces of information into smaller ones without losing important details. Humans find it challenging and time-consuming. AI models can help, but they also struggle to pick out the most relevant information.

Prompt engineering can improve the performance of summarization models by guiding them towards the most crucial details. It helps the model identify the focus of what needs to be summarized.
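A summarization prompt can state both the length budget and the focus, and the reply can then be checked against the budget. The helpers below are a minimal sketch under that assumption; their names are hypothetical, and the word count simply splits on whitespace.

```python
def summary_prompt(document: str, max_words: int, focus: str) -> str:
    """Tell the model the length budget and what to prioritize."""
    return (
        f"Summarize the text below in at most {max_words} words. "
        f"Focus on {focus}; leave out minor details.\n\n"
        f"Text:\n{document}"
    )


def within_limit(summary: str, max_words: int) -> bool:
    """Check a reply against the word budget (whitespace-separated words)."""
    return len(summary.split()) <= max_words


print(summary_prompt("<long report text>", 50, "the key findings"))
```

Models do not always respect word limits exactly, so a cheap check like `within_limit` is useful before accepting a summary.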

Dialogue Systems

You might have come across chatbots when contacting Amazon’s customer support, or used an assistant like Siri or Alexa. You have probably also had conversations in which the bot gives automated responses to specific words or phrases.

These are AI-powered chatbots. In the truest sense, they are dialogue systems that enable human-like conversations by automatically responding to certain words or phrases.

By designing prompts that direct the model to offer relevant information and recognize the user's purpose, prompt engineering can be used to enhance dialogue systems and increase their accuracy. This strategy can assist in lowering the time and effort needed to address customer complaints, thus increasing customer happiness.
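In chat-based dialogue systems, much of this direction usually lives in a system prompt that is placed before every conversation. The sketch below assumes the role/content message format used by chat-style APIs; the store, the intents, and the helper `support_messages` are hypothetical, and no model is called.

```python
from typing import Dict, List, Optional

Message = Dict[str, str]

# Hypothetical system prompt for an online store's support bot.
SYSTEM_PROMPT = (
    "You are a customer support assistant for an online store. "
    "First identify the customer's intent: order status, refund, or other. "
    "Answer briefly and ask for the order number if you need it."
)


def support_messages(user_text: str,
                     history: Optional[List[Message]] = None) -> List[Message]:
    """Place the system prompt first, then any earlier turns, then the new message."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        *(history or []),
        {"role": "user", "content": user_text},
    ]


for m in support_messages("Where is my parcel?"):
    print(m["role"], "->", m["content"])
```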


Challenges in Prompt Engineering

Prompt engineering, while a powerful technique for enhancing the capabilities of GPT models, is not without its challenges. Addressing these challenges is crucial to ensuring AI-generated content is high-quality, unbiased, and useful for various applications. Two significant challenges in prompt engineering are bias and fairness, and overfitting and generalization.

Bias and Fairness:

A pressing concern in prompt engineering is addressing bias in AI-generated content. Bias can be introduced at various stages, including the model's training data, the prompts provided, and the model's architecture. Left unchecked, AI systems can perpetuate existing biases, resulting in unfair or discriminatory outcomes.

To combat bias, it's essential to begin with diverse and representative training data. Prompt designers must also be vigilant about the language and phrasing used in prompts, as biased or leading prompts can lead to biased responses. Regular review and adjustment of prompts to promote fairness are vital.

Additionally, continuous monitoring and evaluation are necessary to detect and mitigate bias. This involves collecting user feedback and using fairness-aware metrics to assess the model's performance across different demographic groups. Addressing bias is an ongoing process that demands a commitment to fairness.
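One simple fairness-aware metric is to compare accuracy across demographic groups and report the largest gap. The sketch below assumes you already have, for each evaluated response, a group label and a judgment of whether the response was correct; the records are hypothetical placeholders, not real evaluation results.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_group(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Fraction of responses judged correct, computed separately per group."""
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for group, is_correct in records:
        totals[group] += 1
        correct[group] += int(is_correct)
    return {group: correct[group] / totals[group] for group in totals}


def max_accuracy_gap(per_group: Dict[str, float]) -> float:
    """Difference between the best- and worst-served groups."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical records: (group, was the response judged correct?)
records = [("A", True), ("A", True), ("A", False), ("A", True),
           ("B", True), ("B", False), ("B", False), ("B", True)]
per_group = accuracy_by_group(records)
print(per_group)                    # {'A': 0.75, 'B': 0.5}
print(max_accuracy_gap(per_group))  # 0.25
```

Tracking this gap over time, as prompts are revised, shows whether a change helps one group at another's expense.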

Overfitting and Generalization:

Overfitting occurs when prompts are overly tailored to specific tasks or datasets, so that models excel in narrow contexts but struggle to generalize. Underfitting, on the other hand, occurs when prompts are too generic to yield meaningful results.

Achieving the right balance between specificity and generality in prompt design is a complex task. This challenge is particularly relevant in fine-tuning, where prompts must transfer knowledge from pre-trained models to specific applications.

Researchers and practitioners are actively exploring techniques to address overfitting and enhance generalization. These include developing prompt templates adaptable to different tasks and employing transfer learning strategies to improve adaptability.

Robustness testing is also essential to ensure prompt-engineered models perform reliably across various scenarios. By evaluating models' responses to a range of prompts and inputs, we can identify areas prone to overfitting or generalization issues and make necessary refinements.
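A basic robustness test runs several phrasings of the same prompt over the same inputs and flags inputs whose answer depends on the phrasing. In the sketch below, `toy_model` is a hypothetical, deliberately brittle stand-in for a real model (it only recognizes the task when the word “sentiment” appears); in practice you would call your actual model there.

```python
from itertools import product
from typing import Callable, Dict, List


def run_matrix(model: Callable[[str], str], templates: List[str],
               inputs: List[str]) -> Dict[str, List[str]]:
    """Run every template on every input; collect normalized answers per input."""
    answers: Dict[str, List[str]] = {text: [] for text in inputs}
    for template, text in product(templates, inputs):
        reply = model(template.format(text=text))
        answers[text].append(reply.strip().lower())
    return answers


def inconsistent_inputs(answers: Dict[str, List[str]]) -> List[str]:
    """Inputs whose answer changed depending on how the prompt was phrased."""
    return [text for text, outs in answers.items() if len(set(outs)) > 1]


def toy_model(prompt: str) -> str:
    """Hypothetical brittle stand-in: only 'understands' prompts mentioning sentiment."""
    lowered = prompt.lower()
    if "sentiment" not in lowered:
        return "unknown"
    return "positive" if "love" in lowered else "negative"


templates = [
    "What is the sentiment of this review? {text}",
    "Is this review positive or negative? {text}",
]
inputs = ["I love this phone.", "The screen cracked."]
answers = run_matrix(toy_model, templates, inputs)
print(answers)
print(inconsistent_inputs(answers))
```

Here both inputs are flagged, which points to the second phrasing as the weak spot.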

Future Trends in Prompt Engineering

Prompt engineering's future is shaped by several exciting trends that promise to expand AI models' capabilities and make them more versatile and user-friendly.

Multimodal Prompts:

A promising trend is the integration of multimodal prompts. Traditional prompt engineering relies primarily on text-based instructions, but multimodal prompts incorporate both text and visual cues. This approach provides users with more context and guidance, enhancing models' ability to understand and generate content.

In applications involving images, videos, and visual content, multimodal prompts are invaluable. For instance, in image captioning tasks, a multimodal prompt might combine a textual description with an image, resulting in captions that are not only descriptive but also visually accurate.
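As an illustration, a multimodal prompt can be a single message that mixes text and image parts. The sketch below assumes the content-parts shape that OpenAI’s Chat Completions API accepts for image inputs (a list of `text` and `image_url` parts); other providers use different shapes. The image URL is a placeholder, `multimodal_message` is a hypothetical helper, and no request is sent.

```python
from typing import Any, Dict


def multimodal_message(instruction: str, image_url: str) -> Dict[str, Any]:
    """One user message combining a text instruction with an image reference."""
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": instruction},
            {"type": "image_url", "image_url": {"url": image_url}},
        ],
    }


msg = multimodal_message(
    "Write a one-sentence caption for this photo. It shows our new store entrance.",
    "https://example.com/photo.jpg",
)
print(msg)
```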

Interactive Prompts:

Another exciting development is the rise of interactive prompts. These prompts enable users to engage in a real-time dialogue with AI models, offering feedback and guidance. This dynamic interaction allows for more precise and context-aware responses, making AI systems more user-friendly and adaptable.

Interactive prompts empower users to iteratively refine their queries based on the model's responses, leading to more accurate and tailored results. This trend is particularly valuable in applications like virtual assistants, customer support chatbots, and creative content generation.

Best Practices for Prompt Engineering

Large Language Models are trained on a large amount of data that is out there on the web. A lot of this data may or may not have been validated or proofread. Thus it becomes important to standardize the process of crafting prompts in order to derive accurate and efficient responses.

Here are a few pointers users can keep in mind:

- Keep prompts relevant to the outputs you want to achieve.

- Gather information from various sources to get the right data and contextual outputs.

- Fine-tune with large datasets, such as documentaries and historical events.

- Try prompts of different formats and lengths to determine which are most effective in guiding the model.

- Specify the type of output you expect in order to get an accurate response.

- Describe the image visually and the text by its content to give the model ample information for captioning.

1. Make prompts relevant:

Keeping prompts relevant means that they should directly align with the specific outputs or responses you want from the NLP model. The more precise and on-topic your prompts are, the more likely you will receive meaningful and accurate results.

2. Gather diverse data sources:

To create effective prompts, it's essential to gather information from a variety of sources. This diverse dataset ensures that your prompts are informed by a broad range of knowledge and context, which can lead to more comprehensive and accurate responses from the model.

3. Fine-tune with historical data:

Using large datasets from documentaries, historical events, or other relevant sources can help fine-tune the model. This process involves training the model on specific data to improve its performance in generating contextually appropriate and accurate responses, especially when dealing with historical or specialized topics.

4. Experiment with different prompt formats and lengths:

Effective prompt engineering often involves experimentation. Providing prompts in various formats (e.g., questions, statements, commands) and lengths allows you to determine which style of prompt is most effective in guiding the model towards the desired output. Different contexts may require different prompt structures.

5. Specify expected output type:

Clearly specifying the type of output you expect from the model is crucial. Whether you need a summary, a detailed explanation, a creative piece of writing, or any other specific format, stating this in your prompt helps the model understand your intent and generate a more accurate response.

6. Describe visual content and text content separately:

When dealing with tasks that involve both images and text, it's beneficial to provide separate descriptions for each. Describing the image visually and the accompanying text content with its context can give the model a wealth of information, making it more capable of generating accurate captions or descriptions.
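Specifying the expected output type pays off most when the output is machine-readable, because the reply can then be checked automatically. The sketch below asks for a JSON object with two keys and validates the reply; the key names, the helpers `json_prompt` and `parse_reply`, and the sample reply are hypothetical, and no model is called.

```python
import json
from typing import Any, Dict


def json_prompt(review: str) -> str:
    """Ask for a summary and sentiment, stating the exact output format."""
    return (
        "Summarize the review below. Respond only with a JSON object with the keys "
        '"summary" (one sentence) and "sentiment" ("positive" or "negative").\n\n'
        f"Review: {review}"
    )


def parse_reply(reply: str) -> Dict[str, Any]:
    """Check that a model reply actually follows the requested format."""
    data = json.loads(reply)  # raises json.JSONDecodeError (a ValueError) on non-JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = {"summary", "sentiment"} - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    if data["sentiment"] not in ("positive", "negative"):
        raise ValueError(f"unexpected sentiment: {data['sentiment']!r}")
    return data


reply = '{"summary": "A fast phone with a weak battery.", "sentiment": "negative"}'
print(parse_reply(reply)["sentiment"])  # negative
```

If validation fails, the error message can be fed back to the model in a follow-up prompt asking it to correct the format.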

Could Prompt Engineering be a career?

The launch of GPT-4 has pushed the boundaries of what large language models can be applied to. Forget GPT-4; even GPT-3.5 can get a piece of work done in the blink of an eye.

As such, adoption is only going to go up across industries, and so will the demand for prompt engineers who can get the work done in as few iterations as possible. Highly trained Prompt Engineers will hold the key to unlocking the possibilities of GPT-4 and its future updates.

Anthropic, a San Francisco-based AI startup, recently offered a salary in the range of $175,000 to $335,000 for the position of Prompt Engineer.

This job opening offers a glimpse of the bright future Prompt Engineering holds as a career option.

So there you have it! With the advancements in large language models like GPT, the potential applications of prompt engineering are endless. It can revolutionize the way we interact with AI-powered systems.

And as AI evolves, so will the demand for skilled professionals who can work with these cutting-edge technologies.

Remember: “It will not be AI that takes away jobs. It will be the people who use it better.”

FAQs

What do prompt engineers do?

Prompt engineers design specific instructions or prompts to guide AI models like GPT in generating desired responses. They play a crucial role in tailoring AI outputs for various tasks, such as content generation, data analysis, and more.

What is prompt engineering salary?

The salary for prompt engineers can vary widely based on factors like experience, location, and the employer. In general, individuals with expertise in prompt engineering and AI can command competitive salaries, often exceeding the average for software engineers or data scientists.

Is prompt engineering difficult?

Prompt engineering can be challenging, but it's also rewarding. It requires a solid understanding of AI models, natural language processing, and problem-solving skills. However, with the right resources and dedication, anyone with an interest in AI can learn prompt engineering.

Who can learn prompt engineering?

Prompt engineering is open to individuals with a background in computer science, data science, or related fields. It's also accessible to those with a strong interest in AI and the willingness to learn. Online courses, tutorials, and resources are available for beginners and professionals alike to start learning prompt engineering.

What is prompt engineering, and why is it important in natural language processing?

Prompt engineering is the process of crafting specific instructions or queries to interact with a language model like GPT-3.5. It's essential because well-designed prompts can influence the quality and relevance of the model's responses.

How can I create effective prompts for a language model?

Creating effective prompts involves understanding the model's capabilities and limitations. Your instructions must be clear, concise, and specific while avoiding biases and ambiguities. Experimentation and iteration are key to refining prompts.

What role does prompt engineering play in bias mitigation and ethical AI?

Prompt engineering can help mitigate bias in AI by framing questions and instructions to encourage unbiased and fair responses. It plays a crucial role in promoting ethical AI practices and ensuring that AI systems do not propagate harmful stereotypes.

Are there best practices for prompt engineering to improve the efficiency and accuracy of AI models?

Yes, there are best practices for prompt engineering, such as using explicit context, specifying the format you want the answer in, asking the model to think step-by-step, and providing clarifications when needed. These practices help improve the reliability of AI-generated content.